The design of new financial technologies often focuses on people with greater resources, overlooking the needs of households living on low and moderate incomes (LMI). This deepens inequity and limits access to the next generation of financial tools and services.

Commonwealth’s Emerging Tech for All (ETA) initiative aims to integrate the needs, wants, and aspirations of people living on LMI into the design of new financial technologies as they are being developed. We partner with financial organizations, fintechs, and platform providers to research consumer perspectives and to pilot changes in design to better meet the needs and wants of customers living on LMI.

Key Insights

Commonwealth’s field test with ACU of Texas and Posh AI generated several key insights:

- Customers largely view chatbots as positive tools for improving their financial well-being.

- Credit building and budgeting top the list of chatbot support features sought by customers.

- Chatbots can build trust by being transparent about their features and limitations.

- Trust in a chatbot’s or human’s accuracy hinges on the source and recency of the information.

Our 2024 national survey report, Generative AI and Emerging Technologies, provided actionable insights around larger trends in using generative AI, chatbots, and digital financial services. The report highlighted persistent technological uptake gaps between lower- and higher-income households—which could deepen inequality because of the limited access to opportunities and services.

To add more real-life color to the findings of the survey report, this field test brief provides a focused look at Associated Credit Union of Texas (ACU of Texas) customers living on LMI through in-depth interviews and a survey of 247 customers who had recently used ACU of Texas’ chatbot, Ava.

Financial chatbots like Ava may offer a key path to ensuring equitable access to financial services for populations without easy access to a physical bank branch—disproportionately households living on LMI. Through this partnership with ACU of Texas and conversational AI provider Posh, we continue refining our understanding of how chatbots can best serve these households, who stand to benefit enormously from emerging technologies.

Insights In Depth

Chatbots can be a tool for improving financial well-being.

In a survey of ACU of Texas customers who have used Ava, 57% of respondents somewhat or strongly agreed that the chatbot positively impacted their financial well-being. Only 12% disagreed that the chatbot had a positive impact. Focus group participants found that using the chatbot saved them time and effort. They were also able to multitask while using the chatbot—a feature that some valued highly.

The chatbot’s speed, ease, and flexibility allowed users to tackle more financial issues despite their busy schedules, likely contributing to the widespread agreement on its positive financial impact. The youngest survey respondents were most likely to feel that Ava positively impacted their financial well-being. We believe that chatbots can continue to add value for customers as the technology improves and as more of the banking population becomes comfortable with using them.

MEMBERS WHO AGREED THAT THE CHATBOT HAD A POSITIVE IMPACT ON THEIR FINANCIAL WELL-BEING:

Credit building and budgeting top the list of desired chatbot services.

Interview and focus group participants consistently expressed an interest in how Ava could better assist them with budgeting, setting financial goals, and building or repairing credit. These topics are highly relevant to the personal financial lives of individuals living on LMI, who sometimes struggle to find the time or the guidance needed to feel confident in completing these tasks. Participants believed that the banking chatbot likely has a novel and valuable perspective on their finances, as it can see their account activity and balances and any loans or lines of credit with their bank. They would value a chatbot that provides data-driven insights with judgment-free delivery.

Participants would have liked Ava to proactively assist them in improving their finances with personalized budgeting help, financial advice, and credit counseling, in addition to responding to questions. Customers believe that their bank’s chatbot already has access to the data it would need to analyze spending habits, recognize recurring bill payments, or understand the financial impact of a home or car loan. A chatbot that could draw on these customer insights to proactively benefit the financial lives of its users would be a highly desirable and valuable new banking tool.

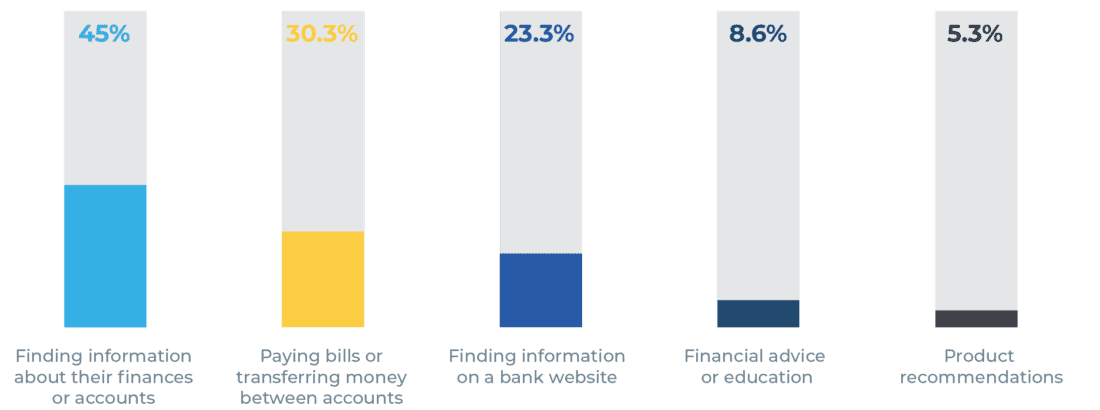

Chatbots are particularly well-positioned to provide personalized feedback because users overwhelmingly trust the tool to provide information without judgment. Users feel comfortable asking the chatbot for guidance with a sensitive financial topic without worrying about the shame or self-consciousness that might accompany that same conversation happening face-to-face with a human representative. In our survey, only 8.6% of respondents indicated previous use of a bank chatbot for financial advice or education, compared to 45% of respondents who had used a chatbot to find information about their finances or accounts. If chatbots can provide users with more relevant budgeting and credit advice, there are likely opportunities to increase chatbot engagement, meet customer needs, and build more earned trust.

Survey Respondents Have Used a Chatbot For:

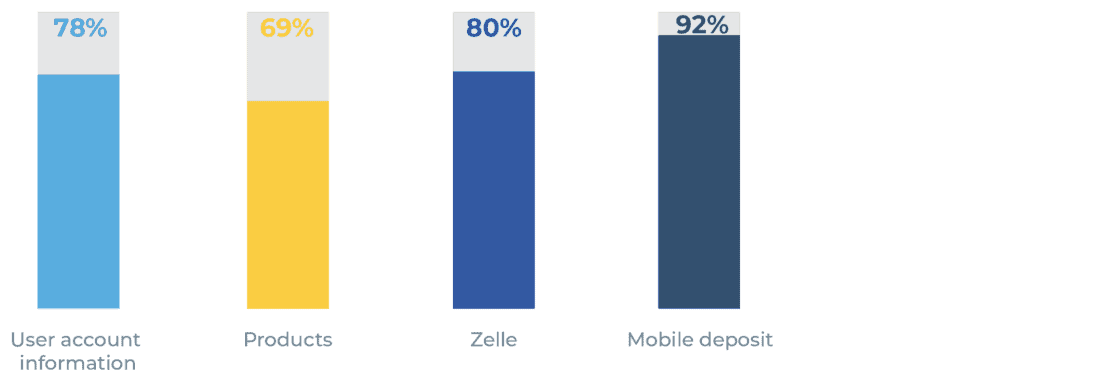

Chatbot Conversations Containing Each Topic:

Chatbots can build trust through transparency.

Research participants were split on trusting chatbots to treat them fairly and operate in their best interests. For some respondents, the chatbot’s lack of emotion increased their trust in the chatbot. They believed that a chatbot would be unable to show a particular bias or treat one customer differently from the next. For these customers, interaction with humans carried higher risks of variability. Respondents expressed concerns that a human service representative might be influenced by various factors, including prejudice or fluctuations in mood.

For other participants, the lack of emotions from a chatbot decreased their trust, as a chatbot would be programmed to only operate in one way. For these respondents, a human would be able to understand their unique circumstances better; they might be moved by empathy to go beyond the customer service a chatbot would be authorized to provide.

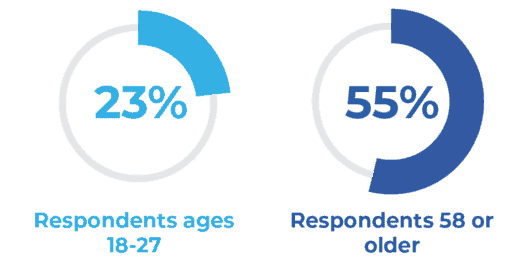

Our data found that older respondents are more likely to avoid using a banking chatbot because they prefer to speak with a human representative. While 23% of respondents aged 18 to 27 said that their preference for talking to an agent was their main reason for not using a chatbot, approximately 55% of respondents 58 or older indicated that they preferred to talk with a human.

Preference for human agent vs. chatbot

While people may always have differing opinions or feelings about chatbots, there may be an opportunity for designers and developers to use messaging that leans into the potential strengths of chatbots—for example, by emphasizing how the chatbot can provide consistent support without judgment.

“The chatbot, in my opinion, which has no emotion, doesn’t know what’s [in my] best interest, it just goes off of numbers. Whereas if you speak to a person, they can hear the full story and be able to see what’s the best option for you.”

“If I’m frustrated and I speak to somebody, you can tell in my voice, and [they might] get frustrated with me. The chatbot has no emotion, so it can’t [behave] any different.”

Trust in a chatbot’s or human’s accuracy hinges on the source and the timing.

Research participants had mixed views on whether they trusted chatbots or humans to provide them with accurate information. The rationales behind these conclusions always focused on the information’s source.

For some people, the chatbot was seen as having access to more information, or the most up-to-date information, and thus was trusted for accuracy. Others believed that human representatives were a more trustworthy provider of accurate information because of their ability to contextualize situations and ask follow-up questions. Some interviewees said they trusted chatbot and human accuracy equally, since they should be drawing on the same information to answer questions. Despite arriving at different answers, it was clear that all of our interviewees viewed the information source as the key factor in their decisions about trust in accuracy.

Focus group participants also considered the recency of a banking chatbot’s data when determining whether to trust the information provided. Outdated or inapplicable information decreased trust in the chatbot. Meanwhile, a chatbot that is clearly providing up-to-date information is seen as more trustworthy than a human who may not yet have access to it. Even for more advanced technologies using AI, participants warned that one cannot be absolutely sure that the information is correct. Technologically savvy respondents pointed out that ChatGPT’s technology, in particular, was trained on a selection of years-old information. Common among these participants was the idea that, regardless of the chatbot’s trustworthiness, they would like the ability to verify its information before making a decision. In fact, customers wanted the ability to find supporting evidence for information initially received from bank chatbots, bank websites, and human representatives alike.

For service providers, providing additional transparency around updates and software versions in an FAQ or another easily accessed location could increase trust in the information provided by chatbots.

Conclusion

The widespread implementation of chatbots presents a prime opportunity to create a positive impact on the financial health of millions of households living on LMI. This research illuminates chatbots’ immense potential to provide personalized guidance and support to communities living on lower incomes, provided the tools are designed thoughtfully with those communities’ needs and perspectives in mind. That potential matters especially because these households are significantly less likely to have easy access to a local branch of their bank.

As advances in AI enable increasingly sophisticated chatbot capabilities, financial providers have a rare opportunity to ensure equitable access to banking services, budgeting help, credit counseling, and more. Armed with these insights around top-ranked features, trust, and data sources, providers can craft chatbot experiences that meet the needs and goals of households living on LMI—and ultimately support their financial well-being.

This work is supported by JPMorgan Chase & Co. The views and opinions expressed in the report are those of the authors and do not necessarily reflect the views and opinions of JPMorgan Chase & Co. or its affiliates.

All results, interpretations, and conclusions expressed are those of the authors alone and do not necessarily represent the views of ACU of Texas.
