The financial industry is increasingly adopting new technologies such as chatbots powered by generative AI. However, their design often prioritizes the needs of consumers with higher incomes over those of households living on low and moderate incomes (LMI)—deepening inequality and limiting the potential of these new technologies to support financial security for all.

Commonwealth’s Emerging Tech for All (ETA) initiative aims to integrate the needs and aspirations of people living on LMI into the next generation of emerging technologies. We partner with financial organizations, fintechs, and platform providers implementing new technologies, research the needs and experiences of customers living on LMI, and conduct pilots to produce actionable insights for providers to meet those needs.

Key Insights

Commonwealth’s field test with MSUFCU and boost.ai generated several key insights:

- Trust in Chatbots: Customers living on LMI trust bank chatbots despite being skeptical of AI—and often do not associate these two things.

- Preferred Features: Customers prefer chatbots for routine tasks such as facilitating payments and transfers—highlighting a key opportunity to earn users’ trust for complex activities like providing personalized financial advice.

- Risk and Accuracy: Customers evaluate the risks and the importance of accuracy in banking activities when choosing between a chatbot or a human.

Commonwealth’s 2024 national survey report, Generative AI and Emerging Technologies, provided actionable insights around larger trends in using generative AI, chatbots, and digital financial services. The report highlighted persistent technological uptake gaps between lower- and higher-income households—gaps that limit the potential for new technologies to help address inequality (e.g., by providing equal access to services).

In addition to the national survey, we conducted a field test in partnership with Michigan State University Federal Credit Union (MSUFCU) and boost.ai, their chatbot provider. The field test focused on MSUFCU members living on LMI through in-depth interviews and a survey of 273 participants who interacted with Fran, MSUFCU’s chatbot, during a two-month observation period. This research provided direct and timely feedback about the needs and preferences of customers who are actively engaging with a banking chatbot.

Through this field test, we continue refining our understanding of how chatbots can best serve households living on LMI, who disproportionately lack access to local bank branches. High-quality financial chatbots—thoughtfully designed with these households’ needs in mind—can offer equitable access to financial services and better customer experiences, and ultimately contribute to improved financial security.

Insights In-depth

Customers living on LMI trust bank chatbots despite being skeptical of AI—and often do not associate these two things.

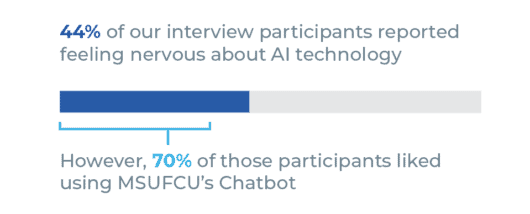

A growing skepticism has accompanied the recent surge in awareness around AI—almost 50% of our interview participants reported feeling nervous about AI technology. However, we found that these attitudes did not necessarily translate into distrust of chatbots: many people who reported feeling wary of AI in general viewed chatbots as convenient and useful. The proximity of Fran to live customer service may enhance this feeling of greater comfort, with around one-quarter of chatbot conversations handed over to a human agent at some point, according to MSUFCU data.

Chatbot Use Among Participants Nervous About AI:

A key driver of this disconnect is that people do not necessarily associate chatbots (conversational AI) with the broader concept of AI, which includes many recent advances in machine learning. For example, in our national survey, only 29% of people reported using AI before, while 61% said that they had used chatbots. In the field test, only one group out of the three focus groups we conducted mentioned AI as a word that came to mind when thinking of chatbots. Most focus group participants instead mentioned convenience, 24/7, direct, and frustrating as words related to chatbots—highlighting the discrepancy between how people conceptualize AI versus how they conceptualize chatbots.

Specific chatbot applications could take advantage of this disconnect. Deemphasizing the AI aspect of chatbots and designing them to be more helpful and personal can motivate customers to trust chatbots more while avoiding any negative associations with the concept of AI. Although many in the business world are excited about the potential of AI, people living on LMI likely view it with significantly more skepticism as a new technology that has yet to demonstrate its direct value to them. Creating clear customer value is the frontier opportunity for financial providers using AI tools.

Survey respondents had some concerns about using chatbots, particularly regarding data privacy and security. When using a financial chatbot, 63% of survey respondents were concerned about security, while 53% were concerned about their privacy. Nearly four in 10 respondents (39%) also cited these concerns as barriers to using new financial technologies.

Top Concerns about Chatbots:

Earning consumer trust and engagement with chatbots will take significant work. Providers should note that customers living on LMI often view chatbots as not necessarily tied to AI, and attempting to associate the two may increase distrust at this time.

Customers prefer chatbots for routine tasks such as facilitating payments and transfers—highlighting a key opportunity to earn users’ trust for complex activities like providing personalized financial advice.

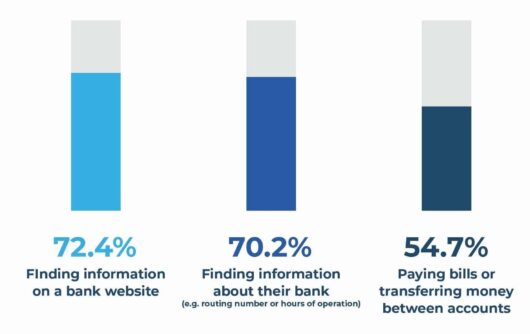

Interview and focus group participants had consistent preferences about the tasks for which they would engage either a human or a chatbot. Customers who use Fran preferred chatbots for routine activities such as finding information or making payments. Specifically, they preferred a chatbot over a human for finding information on a bank website (72.4%), finding information about their bank such as routing numbers or hours of operation (70.2%), and paying bills or transferring money between accounts (54.7%). The top three most commonly completed chatbot actions all involved finding information (about their own finances, about the bank, or information on the bank’s website), with paying bills or transferring money tied as the third most commonly completed action.

Survey Respondents Preferred a Chatbot Over Human Help For:

In contrast, customers did not trust chatbots for complex tasks such as providing financial advice or education. Customers who use Fran preferred engaging with a human (80%) over a chatbot (15%) when receiving financial advice or education. Our national survey data also showed that respondents had significantly lower levels of trust in a chatbot than in their bank to provide accurate information. Although the sources of this information are the same in the banking context (i.e., the bank provides the information for the chatbot’s answers), respondents trust chatbots less to deliver the information accurately.

Financial advice and education are services that customers living on LMI would like to receive, but MSUFCU members believe that these tasks require accuracy and sensitivity to context that chatbots cannot currently be trusted to provide. Interview and focus group participants explained their preference for human representatives to help with these types of more personal tasks (e.g., advice on credit building or budgeting) because of their greater ability to understand a situation through conversation, empathize with customers, and offer more flexibility in problem-solving.

When asked about areas for improved chatbot functionality in the future, interview and focus group participants pointed to transfers and bill payments—two of their most commonly completed online banking actions. Customers who use Fran are very comfortable completing simple tasks like these, which we believe underlines an opportunity to build on that trust and deepen chatbot engagement by increasing capacity for more complex tasks.

Interview and focus group participants already value chatbots’ greater convenience, speed, and availability for straightforward tasks like finding information and moving money. Providers can build on this foundation to increase their customers’ trust and engagement with improved chatbots that can execute more complex tasks. Doing so would expand the availability of personalized banking services in areas like budgeting, credit building, and financial education—and better serve households living on LMI.

Customers evaluate the risks and the importance of accuracy in banking activities when choosing between a chatbot or a human.

Our conversations with interview and focus group participants consistently surfaced a clear distinction between actions that customers would entrust to a chatbot and tasks for which they would engage a human representative. Customers prefer to use a chatbot to resolve simple, straightforward, and commonly experienced actions with easily defined outcomes. Conversely, participants preferred speaking with a human representative when they knew their issues were more complex, potentially involved higher stakes or risk to their finances, or had less certain outcomes. Recent research from boost.ai suggests that these latter factors, such as risk, the need for personalization, and sensitivity to trust, are most significant, rather than complexity being an issue in itself.

Our national survey also found that accuracy is important to this distinction between engagement with chatbots and humans, with respondents trusting bank chatbots significantly less than banks (i.e., human representatives) in general to provide accurate information. In our interviews and focus group conversations, chatbot users were not concerned about the accuracy of a chatbot when they only needed help resolving a straightforward task. However, when higher degrees of accuracy are required for riskier or more personalized banking actions (e.g., applying for a loan or asking for financial advice), this gap in trust makes bank customers more likely to engage with a human representative over a chatbot.

As noted above, having established trust in chatbots’ perceived strengths—greater convenience, speed, and availability for routine tasks—providers could then improve functionality for more “complex” activities such as providing personalized advice on budgeting or credit building. Banking customers could then feel comfortable increasing their engagement with the technology solution, allowing banks to provide high-quality service to more customers and free up human capacity.

Conclusion

The widespread implementation of chatbots presents a prime opportunity to create a positive impact on the financial health of millions of households living on LMI. This research shows that customers living on LMI already trust chatbots with simple financial tasks, placing high value on the technology’s speed and convenience. We believe that providers can build on that trust to enhance their chatbots’ capabilities for more sophisticated services that could contribute to financial security, such as providing guidance on budgeting or credit building.

Households living on lower incomes are significantly less likely to have easy access to a local branch of their bank, and could benefit enormously from services provided by chatbots—if designed thoughtfully with their needs and perspectives in mind. As AI advances accelerate in real time, financial providers have a powerful opportunity to ensure equitable access to banking services, budgeting help, credit counseling, and more. Armed with these insights around preferences, perceptions, and trust, providers can craft chatbot experiences that meet the needs and goals of households living on LMI—and ultimately support their financial well-being.

This work is supported by JPMorgan Chase & Co. The views and opinions expressed in the report are those of the authors and do not necessarily reflect the views and opinions of JPMorgan Chase & Co. or its affiliates.
